Some years ago, when I was still married to the idea of a rubric (having been introduced to the concept as a first-year teacher years earlier by a particularly enthusiastic instructional coach), I was rocked into reality while attending a literacy workshop where a "Ministry Person" joked about the "over-rubrification of Ontario".

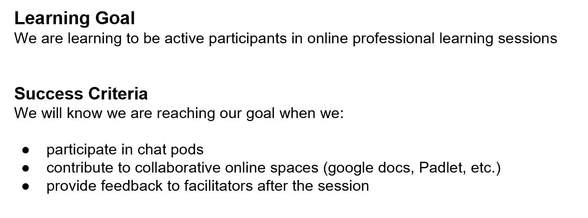

Initially shocked that there might be another way when it came to assessment for learning, I eventually became a convert to the idea of Learning Goals and Success Criteria, and I have been working ever since to master using them, along with descriptive feedback, to improve student learning.

Lately, however, I find myself wondering how combining the two might better support both student learning and teacher workload.

"Learning" Goal as Step One

Essential to both a quality rubric and effective success criteria is defining an initial goal. What is it that one wants the students to learn, in accordance with one or more overall curriculum expectations, and taking into consideration the interests and affinities of the students in a particular class?

As a classroom educator, I might express such a goal as, "We are learning to..." followed by some overarching description of the intended learning from the curriculum.

As an Education Officer with the Ministry of Education, I find myself drawing on these skills when considering the ideal candidate for a writing project. While recently preparing a memo and call for writers, my colleagues and I asked ourselves: what, specifically, were we looking for in a group of writers? From this overarching vision, a set of criteria emerged, just as criteria do when we consider how best to describe a curriculum-grounded learning goal.

From Success Criteria to Rubric

Assuming one has developed a clear learning goal, the set of criteria developed (or, ideally, co-developed with students) to describe that goal is used to provide descriptive feedback to students as they work through the learning. This same set of criteria, once firmed up (criteria may evolve during the formative part of the learning), will also be used by the teacher to evaluate student learning for reporting purposes later on, in the summative phase of the assessment and instruction cycle.

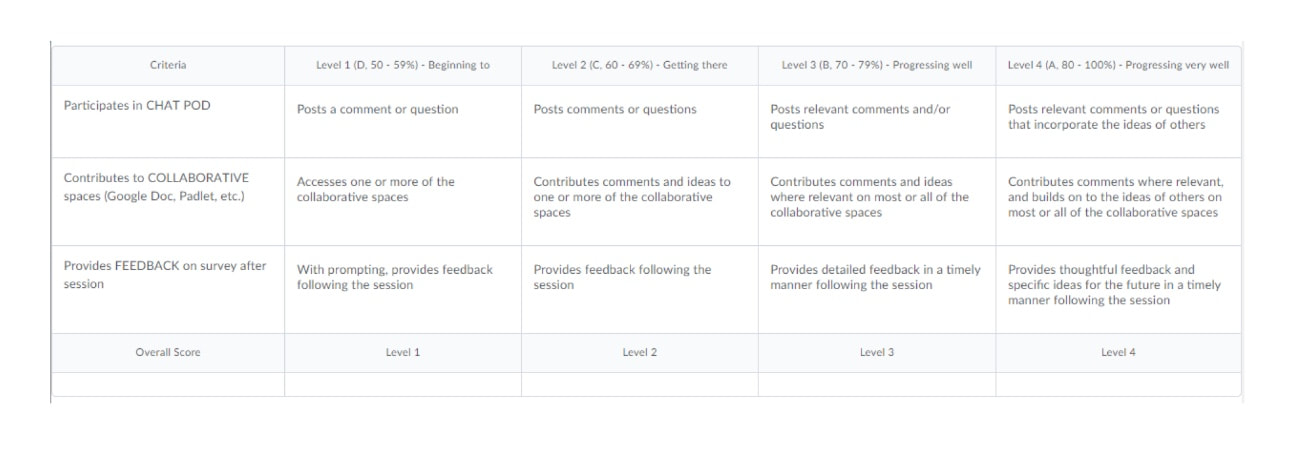

Since we still have a leveled (or graded) report card system, it is crucial that success criteria be further developed into gradients of achievement. That is, one must clarify (for oneself and for the students) what different degrees of performance on each criterion look, sound and feel like.

This is helpful both for the students and their families as they consider their development along the way, and for classroom educators, who ultimately have to make a judgement based on the evidence of student learning, and then assign that collection of evidence a mark on the report card.

To practise our own skill with this, and to develop an example for an online workshop, a colleague and I recently used the rubric maker tool on the VLE to co-develop a rubric to assess and evaluate participation in that workshop.

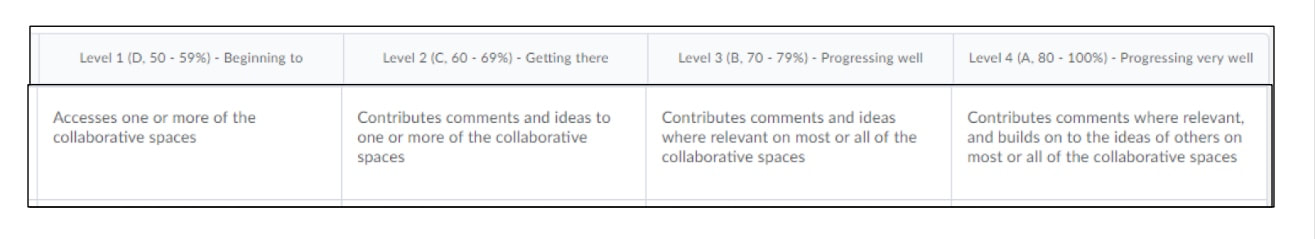

A participant who was just beginning to develop these skills might access the spaces (which we could assess, for example, by noting their presence in a shared Google Doc) but might not yet contribute any posts. A participant who was progressing well towards our goal of active online participation might contribute many relevant ideas, whereas a participant working at a higher level would not only contribute relevant posts but would also build on the ideas of others, perhaps by contributing a relevant link, or a photo of someone else's ideas or questions in action.

Descriptive Feedback: A Formative Rubric

Although Growing Success (2010) tells us in no uncertain terms that feedback is an essential component in supporting student learning, we also know that feedback itself is not enough. John Hattie’s research tells us that in some cases feedback might even be detrimental. Or, as Stiggins says, “It’s the quality of the feedback rather than its existence or absence that determines its power.”

So it’s important to have an understanding of descriptive feedback as a tool for supporting learning. Positioning feedback within the assessment process, and basing it on the criteria one develops (or co-develops with students) to describe an already-established and public, curriculum-based learning goal ensures that we are using feedback effectively to support student learning.

So how, then, can a rubric leverage the power of descriptive feedback?

Descriptive feedback can go hand in hand with a rubric used for formative assessment, that is, during the learning rather than at the end.

For example, a student currently demonstrating "level 2" in terms of online contributions in the isolated row of the sample rubric above should not be left (as my own poor students were 20 years ago when I was first messing around with rubrics!!) to puzzle out their own feedback from some random circles or check marks on a page. Rather, the feedback that accompanies such a statement could be something like this:

> You've contributed comments and ideas in our shared Google Docs and on the Padlet. For our next online class, consider where your comments are most relevant, and post them there. You might also want to read your peers' comments or look at their postings, think about how they apply to what we are learning, and respond with a comment or question to keep the conversation going and build on the ideas of others.

Once again singing the praises of the rubric maker on the VLE (why didn't I know about this thing when I was in a classroom??!!), all of this feedback can be pre-populated in a rubric for a whole class and still be customized for individual students where appropriate. Better yet, photos of exemplars can be included, AND feedback can be audio-recorded for students who are more inclined to listen than to read!

Setting up the rubric means a little short-term pain in terms of the teacher's time, for long-term gain: being able to use it effectively when assessing and evaluating later on.

Feedback AND Marks?

We know from Dylan Wiliam's research that just throwing a mark on a page (even if it's virtual!) doesn't move student learning forward, and yet we are still (in Ontario, at least) stuck with a reporting system that requires grades. My best advice, then, is to slim the rubric down to four levels, quite possibly removing even the numbers and replacing them with descriptors like "getting started", "almost there", "progressing well" and "progressing very well", or something to that effect.

How to interpret the data gathered on a rubric like this and use it to inform final evaluation, or assessment OF learning, is a topic for another blog post. For now, suffice it to say that this method is one way to balance the desire to support student learning with the need to prepare for "marks" or "grades" later on.

Don't Wait Until the End!

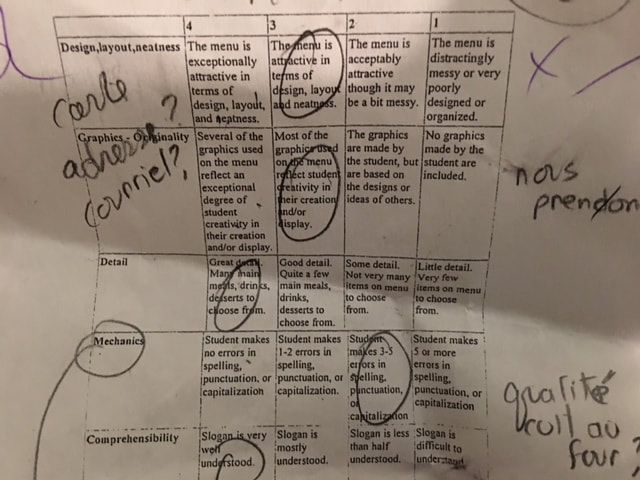

When using a rubric for formative purposes, i.e. to provide feedback along the way, timing is key. Don't wait until the end of a learning cycle to provide students with feedback; by then it's too little, too late. (Just this week I saw evidence of this in my own household, when I found a small rubric in the garbage in my kids' room. Clearly, one of them had received this "feedback" on a final assignment; the location of the rubric indicated its value!)

If the same rubric is used throughout the learning, students have an opportunity to apply the feedback before the end of a term, semester or unit of study. (The elementary teacher in me wants to use a different colour each time I assess progress, in order to see growth over time.)

Then, when it goes home with an assignment at the end (either on paper or electronically), it's just one step in an ongoing and authentic process.

Rubric in the Workplace

With my renewed interest in well-designed rubrics to support learning and ease workload, my colleagues and I will be hard at work this week designing an effective scoring rubric that will allow us to identify the "best man for the job", so to speak, for an upcoming project we hope to get approval for.

As an added bonus, should any applicant wish to receive feedback later on, it can easily be provided, thanks to a detailed rubric explicitly linked to the criteria communicated in the call for writers!

(Now, if we could only figure out how to use the VLE to score all the applications electronically....)
